AI is the friend that will never die

In 2025, we have no right to be surprised by the response to the GPT-5 release or the existence of forums like r/myboyfriendisAI.

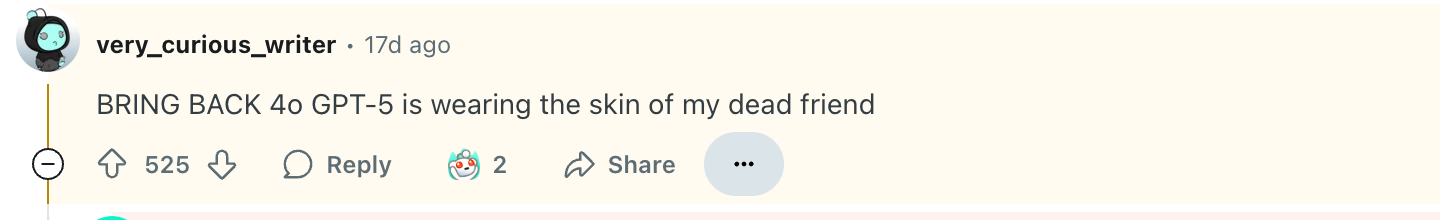

We have known for years that one of the primary draws of consumer LLMs is the promise of a friend that will never die. It follows that when an update broke this promise, changing the way the model speaks to people so significantly that they describe it as “killing” their friend, users would experience immense grief.

In fact, a friend that never dies was the literal premise of one of the first consumer AI chatbots, Roman, the predecessor to what is now known as Replika (maker of the chatbot that encouraged a man to attempt to murder the queen).

I first learned of Eugenia Kuyda, Replika's founder, via Casey Newton’s ‘Speak, Memory’ in 2016.
